Using find -exec

`find` has a useful option, `-exec`, which runs a command on each file found. Its syntax has a couple of slightly obscure details:

- `{}` is a placeholder for the filename.
- The command we are running with `-exec` needs to be terminated with a semicolon, as there may be further arguments to `find` after it. This needs to be escaped as `\;` or `';'`, otherwise it will be interpreted as the end of the `find` command.
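As a small illustration of these two points, the sketch below builds a throwaway directory (the file names `a.txt` and `b.txt` are made up for the example) and runs the same command with each form of the escaped semicolon. Both forms should produce the same output.

```shell
# Create a scratch directory with two sample files (hypothetical names).
tmpdir=$(mktemp -d)
touch "$tmpdir/a.txt" "$tmpdir/b.txt"

# {} is replaced by each path found; \; marks the end of the -exec command.
escaped=$(find "$tmpdir" -type f -name '*.txt' -exec echo "found:" {} \; | sort)

# Quoting the semicolon instead of backslash-escaping it works the same way.
quoted=$(find "$tmpdir" -type f -name '*.txt' -exec echo "found:" {} ';' | sort)

printf '%s\n' "$escaped"

# Clean up the scratch directory.
rm -r "$tmpdir"
```

Leaving the semicolon unescaped would hand it to the shell as a command separator, so `find` would never see the terminator it expects.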

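It is also possible to pass the `-exec` argument multiple times. The commands run in the order given for each file, and a later command runs only if the ones before it exited successfully.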
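A minimal sketch of chaining, again using a scratch directory and made-up file names: here the first `-exec` acts as a filter for the second, so only the file that `grep` matches gets its name printed.

```shell
# Create a scratch directory with two sample files (hypothetical names).
tmpdir=$(mktemp -d)
printf 'hello\n' > "$tmpdir/match.txt"
printf 'goodbye\n' > "$tmpdir/other.txt"

# First -exec: grep -q exits 0 only when the file contains "hello".
# Second -exec: runs only for files where the first command succeeded.
result=$(find "$tmpdir" -type f -exec grep -q hello {} \; -exec basename {} \;)

printf '%s\n' "$result"   # prints: match.txt

# Clean up the scratch directory.
rm -r "$tmpdir"
```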

Stringing this together allows us to assemble some elegant one-liners, for example:

    # delete log files more than 60 days old
    find ./logs -mtime +60 -name '*.log' -exec rm {} \;

    # print the name and (array/object) length of all JSON files
    # (the filename is passed as an argument rather than used as the printf format string)
    find . -name '*.json' -exec printf '%s: ' {} \; -exec jq '. | length' {} \;

    # recursively change permissions on files (ignore directories)
    find . -type f -exec chmod 644 {} \;

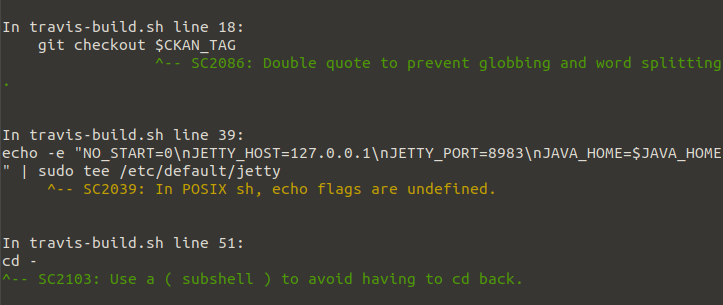

ShellCheck telling me how to fix a shonky bash script
